OVERVIEW

Anti-Apocalypse combines a video database of appropriated web content re/assembled as animated loops, electroencephalograph (EEG) biofeedback, and custom software to create an immersive cinematic experience that can be altered by the viewer’s fluctuating brainwave activity.

As participants watch the interactive video unfold in synchronization with their level of in-/attention, they are invited to reflect on contemporary events in an accelerating global crisis; narratives rooted in moral absolutism and collective annihilation; and the underlying forces embodied within the processes through which we co-author our realities.

SCOPE

Theoretical Framework

Influenced in part by Walter Benjamin’s philosophy on the concept of history, and his ideas relating catastrophe, media, and memory into what he referred to as the “optical unconscious”, the core of the project focuses on how manifestations of the image in digital, networked media function as a social un-/conscious or living archive through which these violent, apocalyptic visions re-manifest.

Creative Process

I edited thousands of images and videos that I found on the internet to create a database of content. Most of these clips are short loops, ranging in intensity and duration from sharp, staccato, seizure-inducing animated gifs to slow, long takes of mesmerizing scenes.

Custom Software

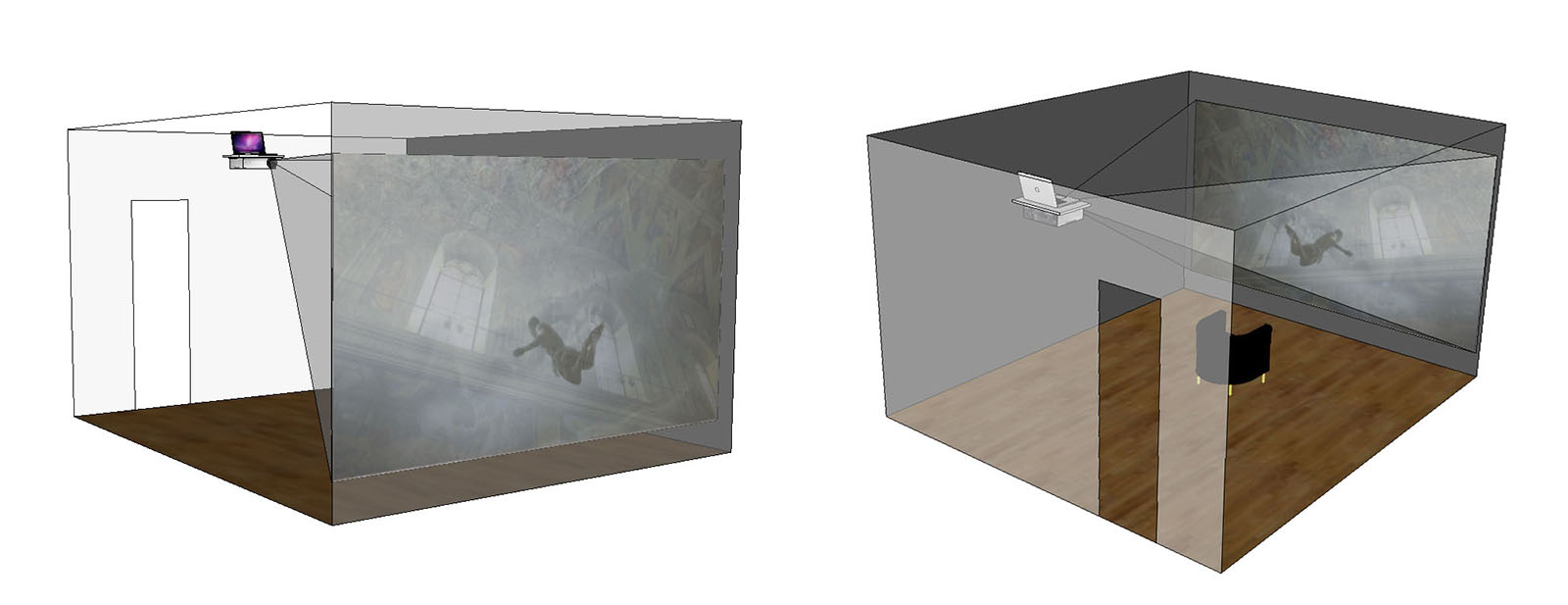

I created a custom program in MAX/MSP/Jitter that functions like a two-channel video mixer set up to crossfade indefinitely. All of the clips in the database have custom properties and are mapped to one another like an intricate labyrinth.

User Experience

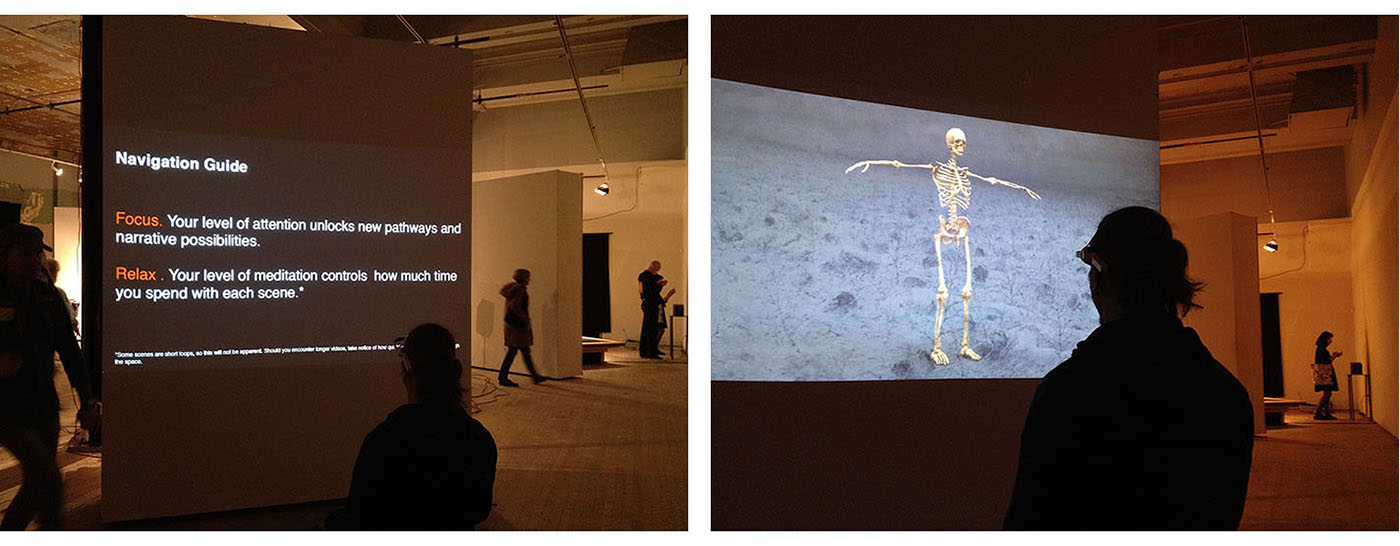

The video mixer is controlled by an EEG brain-computer interface in real time. As the viewer’s brainwave state shifts in response to the content they are witnessing, they lose or gain access to different parts of the video database, making each “screening” both idiosyncratic and unique.

ROLE

Artist, Programmer, Researcher, Writer

This was my graduate thesis project, completed at the University of California, Santa Cruz. I was advised by Warren Sack, Irene Gustafson, Vilashini Cooppan, Jennifer Gonzalez, and Ralph Abraham.

PROCESS

CREATIVE PROCESS

For a period of 18+ months, I created and curated a video library of content aggregated from image searches, databases, archives, directories, chatrooms, social media, every corner of the internet. I edited these images and videos by running them through different filters, cutting them up, remixing them, and trimming them to produce short seamless loops. Each video clip is tagged and sorted into categories illustrative of archetypes of the unconscious or experiences in human life that make up the human condition, creating a taxonomy of sorts, like a tarot.

When I started this project, I had just seen Brion Gysin’s Dream Machine, and was experimenting with different stroboscopic projection and brainwave entrainment techniques. Stroboscopic projection overstimulates the photoreceptors of the eyes, causing them to lose sensitivity and produce a series of afterimages. Grey Walter, who conducted early experiments with stroboscopic projection, found that in some subjects, specific flicker frequencies triggered “overpowering vivid memories of past experiences”, a sense that time itself could become “lost or disturbed”, and the sensation of being “pushed sideways in time” by the flicker: “Yesterday was no longer behind, and tomorrow was no longer ahead”.

Also deeply influential to my process was Trinh Minh-ha’s The Digital Film Event, in which she proposes a way of working with the digital image that does away with the illusion of the 3rd dimension by producing flat 2D images that open up to the 4th and 5th dimensions of time and spirit. In spiritual practice, 4D is the dimension of light - “light not as the opposite of darkness, but light within darkness”.

My goal with all of this was to create an experience for the viewer that was a meditation on the image itself, but perhaps more importantly, where the act of witnessing was pointed inwards, instead of out – and where the artificial borders between what’s “out there”, in the world, on the screen, and what’s felt and experienced at the site of the body and our senses completely collapse.

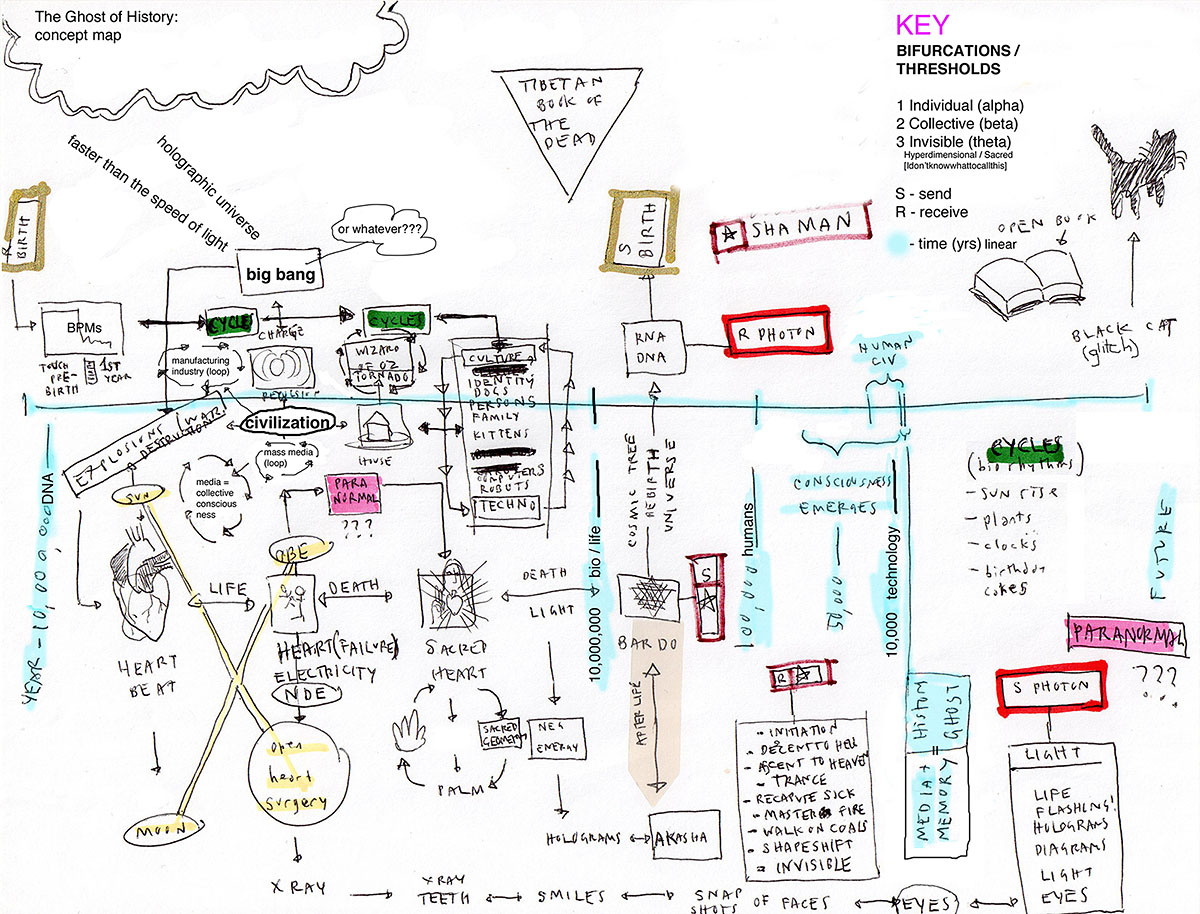

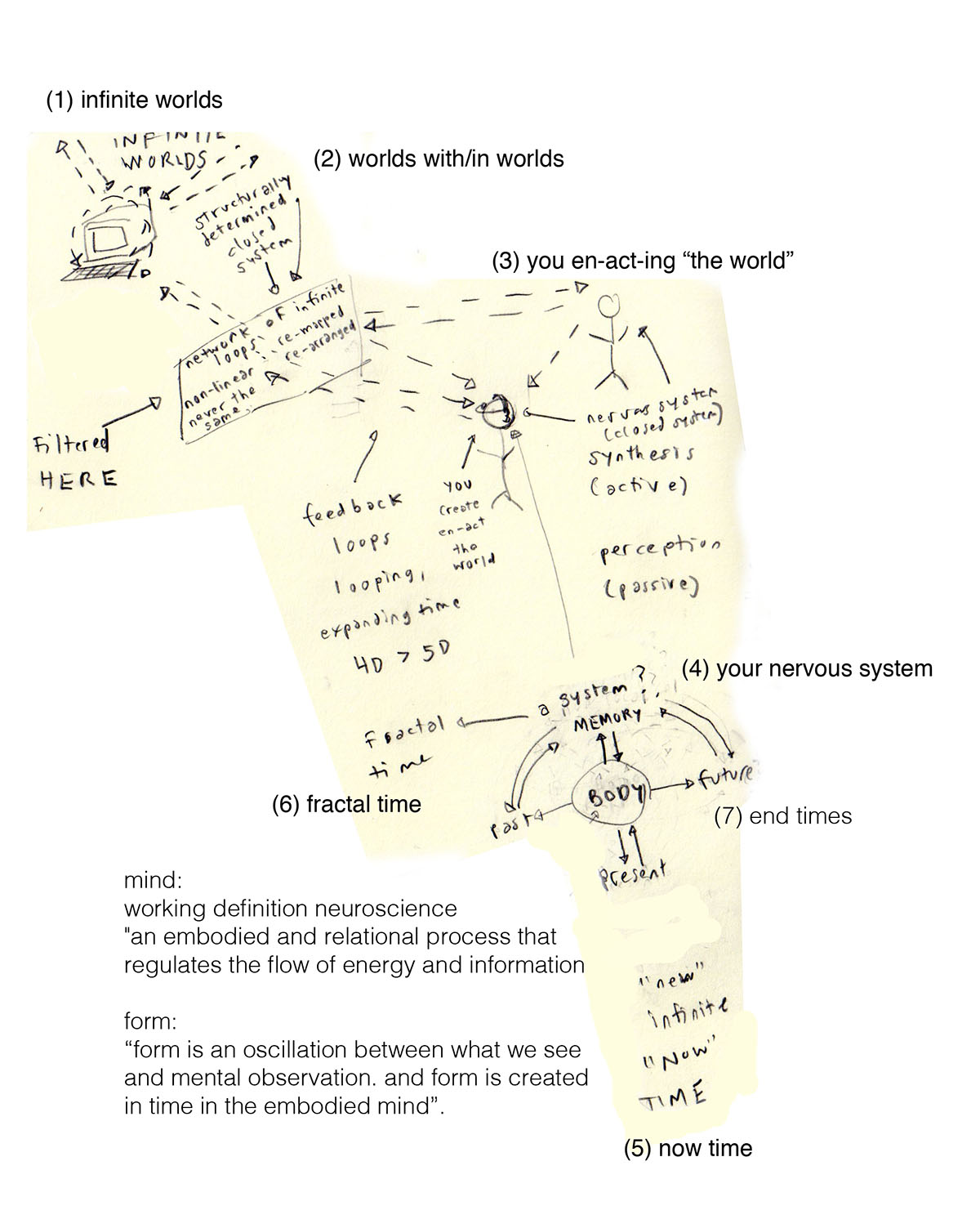

Early concept map, under the working title, 'Ghost of History'

Early test sequence

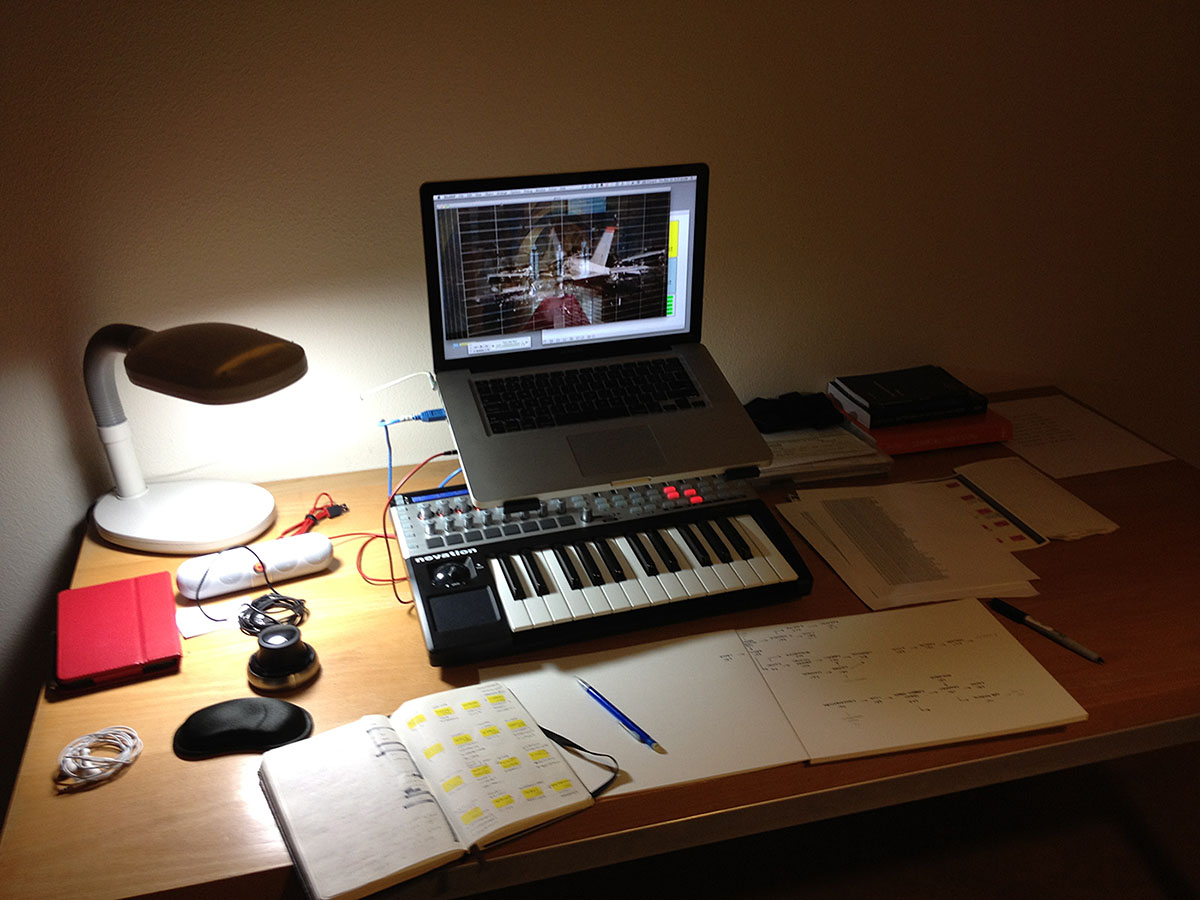

My desk at SFAI, Santa Fe, NM

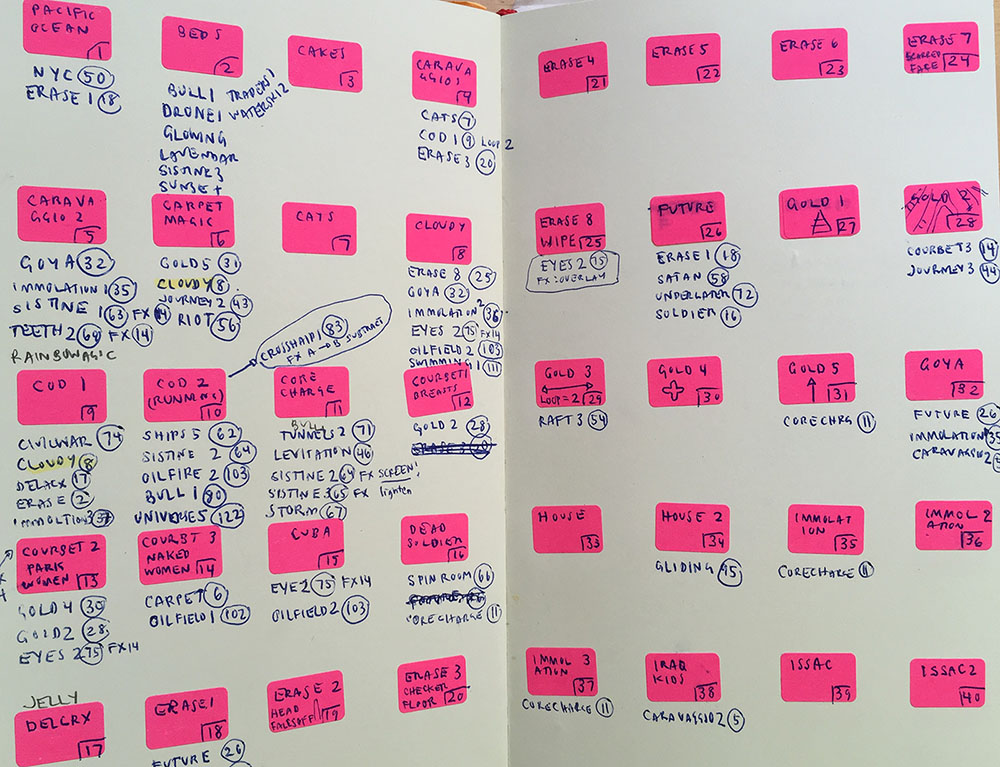

My process for categorizing and mapping clips

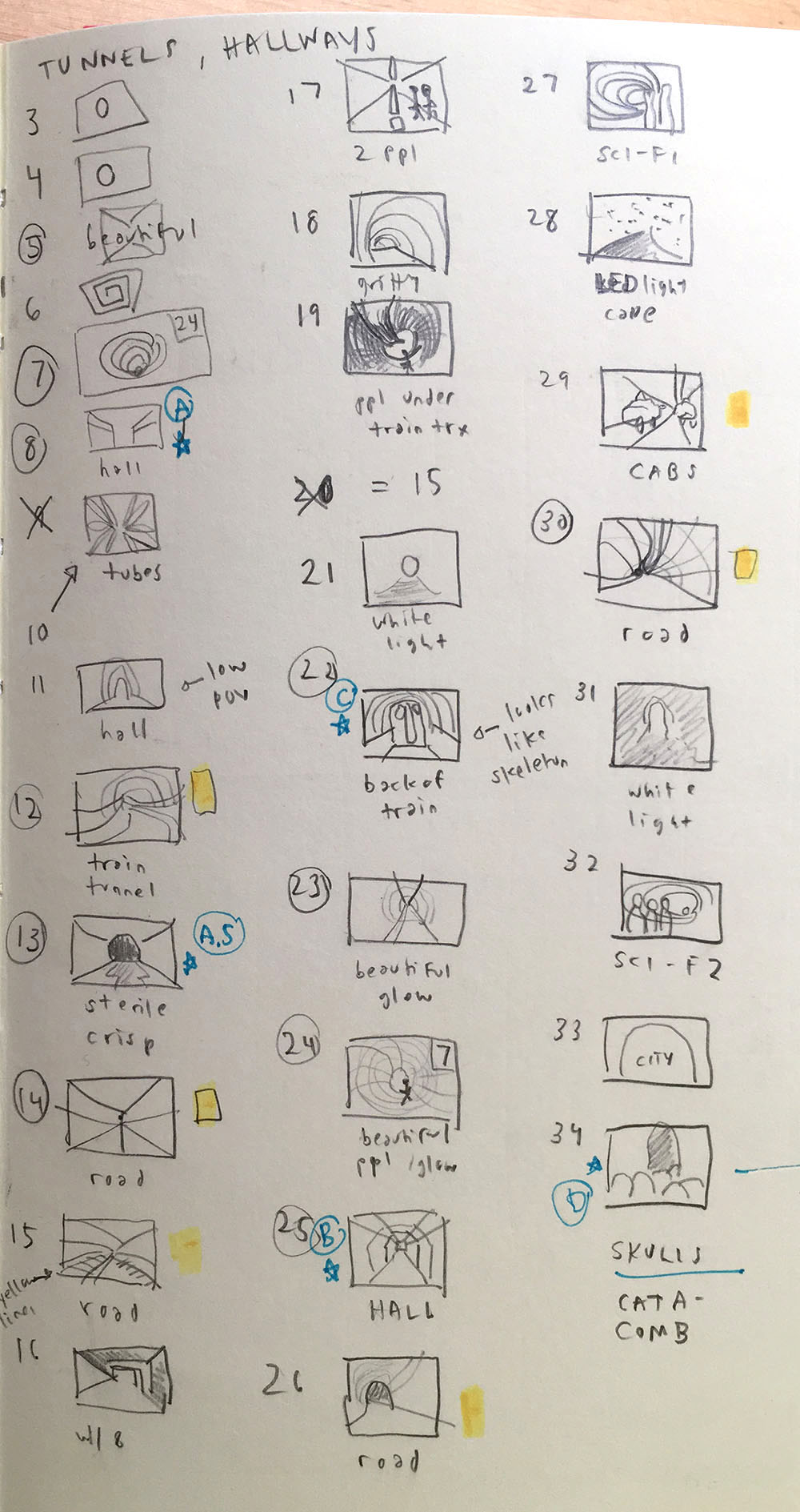

My process for creating loops, frame by frame

Three-frame loop, Turner paintings

MAKING CUSTOM SOFTWARE

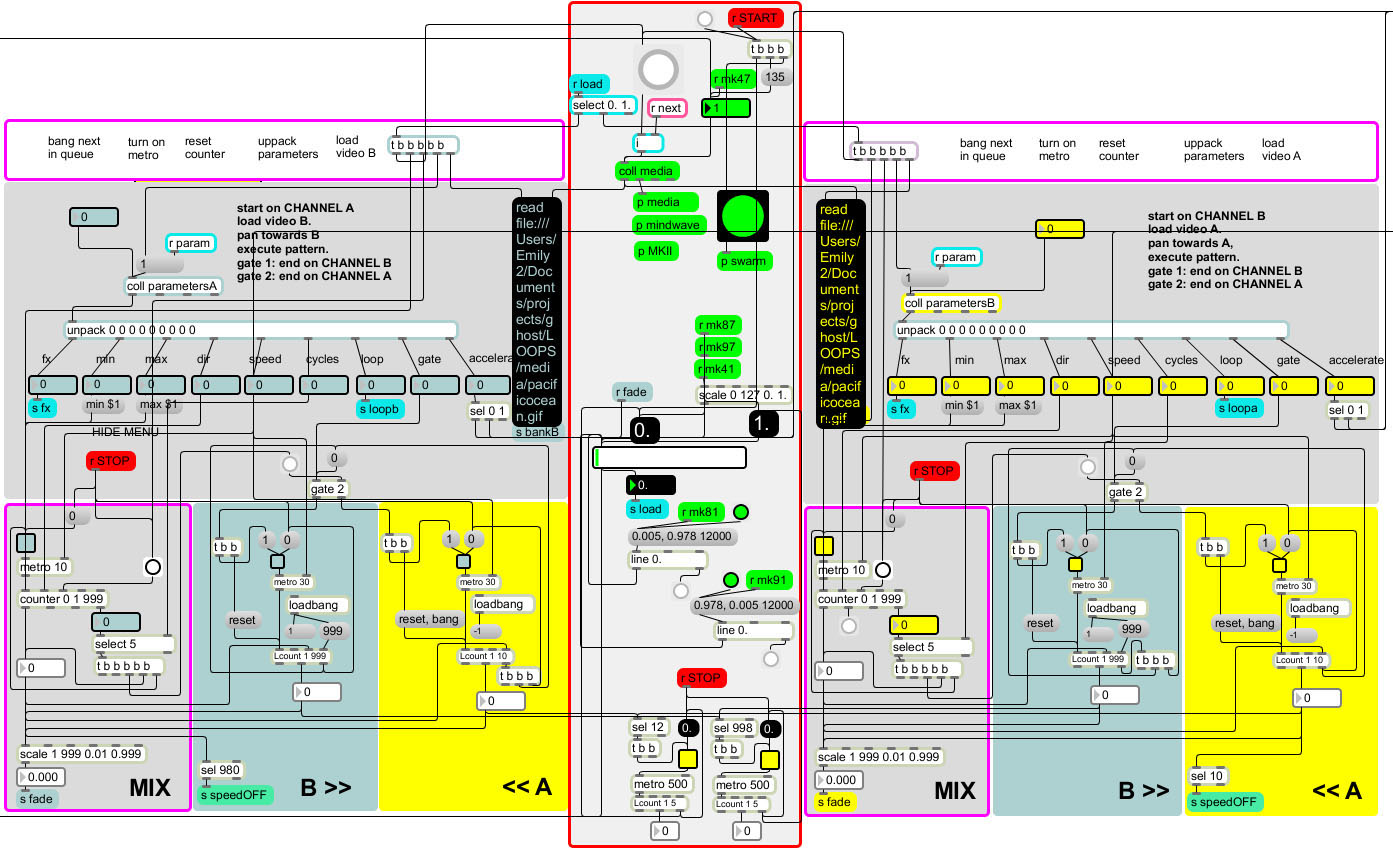

I wrote the program for the EEG-controlled video mixer in MAX/MSP/Jitter. The program is composed of the mixer (a.k.a. the “main patch”) and three “subpatchers”, or modules: a video database subpatcher, an EEG interface subpatcher, and an audio subpatcher.

Video Mixer (Main Patch)

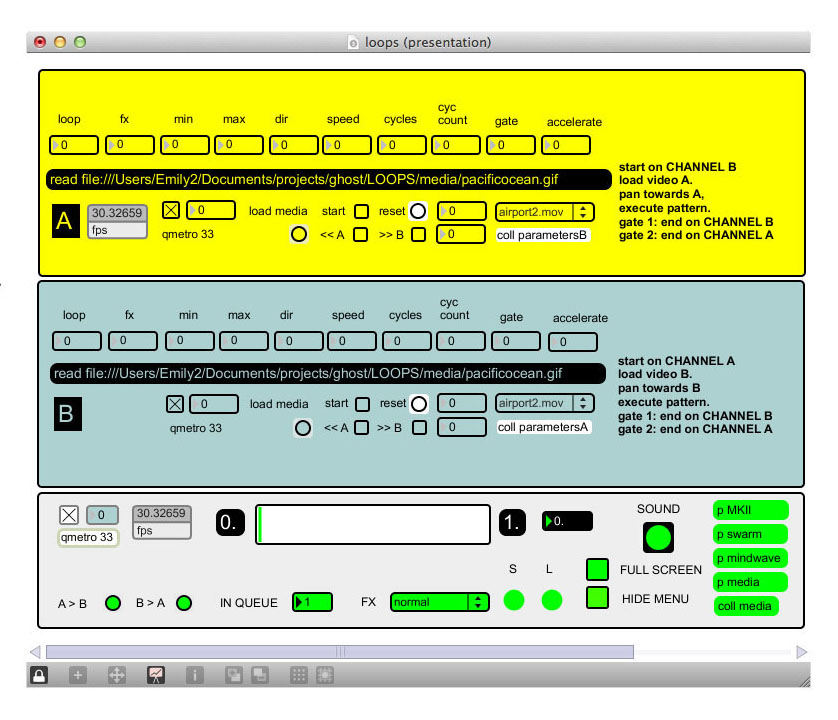

The main patch consists of a custom two-channel video mixer set to crossfade indefinitely (i.e. always panning from channel A to channel B and back). Incoming “attention” data controls which clips are triggered. Incoming “meditation” data controls how fast or slow the transition between two clips occurs. Theoretically, the video can go on forever.
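The mixer itself is a visual Max patch rather than text code, so the following is only a rough Python sketch of the crossfade logic described above, not the patch itself. It assumes “meditation” arrives on a 0 to 100 scale and that higher values mean slower transitions; the source states neither. The names `fade_seconds` and `Crossfader` and the 1 to 10 second range are hypothetical. Clip selection by “attention” is left out here.

```python
from dataclasses import dataclass


def fade_seconds(meditation: float, fastest: float = 1.0, slowest: float = 10.0) -> float:
    """Map a "meditation" value to a crossfade duration in seconds.

    Assumptions: the value is on a 0-100 scale, and a calmer viewer
    (higher value) gets a slower transition.
    """
    level = min(max(meditation, 0.0), 100.0) / 100.0
    return fastest + level * (slowest - fastest)


@dataclass
class Crossfader:
    """Two-channel mixer whose fader pans A -> B -> A indefinitely."""

    position: float = 0.0  # 0.0 = only channel A visible, 1.0 = only channel B
    direction: int = 1     # +1 heading toward B, -1 heading toward A

    def step(self, dt: float, meditation: float) -> bool:
        """Advance the fader by dt seconds.

        Returns True when the fader arrives at either end, which is the
        moment the now-hidden channel could be loaded with a new clip.
        """
        self.position += self.direction * dt / fade_seconds(meditation)
        arrived_at_b = self.direction > 0 and self.position >= 1.0
        arrived_at_a = self.direction < 0 and self.position <= 0.0
        if arrived_at_b or arrived_at_a:
            self.position = min(max(self.position, 0.0), 1.0)
            self.direction = -self.direction  # turn around and fade back
            return True
        return False

    def mix(self, a: float, b: float) -> float:
        """Linear blend of one pixel value from each channel."""
        return (1.0 - self.position) * a + self.position * b
```

Because the fader simply reverses at each end, the loop never terminates on its own, which is what allows the video to run indefinitely.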

Main patch UI, presentation view

Main patch, code view

Video Database (Subpatcher)

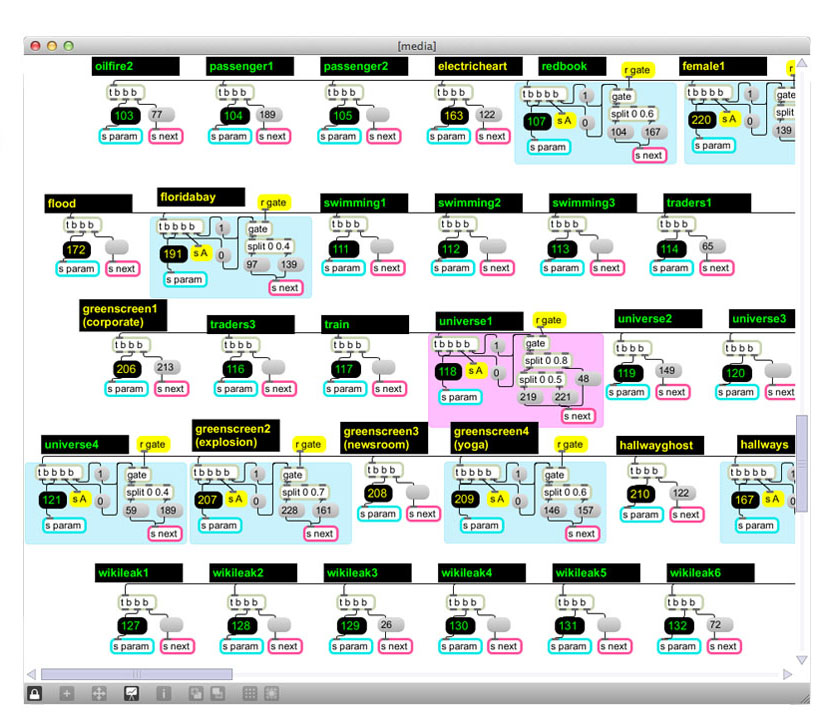

The video database subpatcher holds a carefully choreographed database of over 300 video clips. Each clip is mapped to a unique set of playback parameters that determines its frame rate, direction, looping behavior, start/stop points, and effects, as well as which clip is cued next. Some clips serve as “revolving doors”, where one of several clips can be triggered based on the user’s level of “attention” when they reach that particular scene. As a general rule, short, abrupt, representational scenes are mapped to low attention thresholds, while more abstract, non-representational, or durational sequences are reserved for higher or more sustained levels of attention.
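One way to picture a clip record and a “revolving door” is the Python sketch below. It is an illustration only: the field names, the clip names, the threshold values, and the 0 to 100 attention scale are all hypothetical, and the actual database lives inside the Max patch.

```python
from dataclasses import dataclass, field


@dataclass
class Clip:
    """One entry in the clip database (all field names are illustrative)."""

    name: str
    frame_rate: float = 30.0
    reverse: bool = False
    loop: bool = True
    start_frame: int = 0
    end_frame: int = -1  # -1 = play through to the last frame
    # Possible next clips as (minimum attention, clip name) pairs.
    # One entry is a fixed cue; several entries make a "revolving door".
    exits: list[tuple[int, str]] = field(default_factory=list)


def next_clip(clip: Clip, attention: int) -> str:
    """Pick the exit with the highest threshold that the attention level meets."""
    if not clip.exits:
        return clip.name  # nothing cued: stay on the current loop
    eligible = [(threshold, name) for threshold, name in clip.exits if attention >= threshold]
    if not eligible:
        return min(clip.exits)[1]  # below every threshold: take the lowest door
    return max(eligible)[1]


# Hypothetical door: abrupt clip at low attention, durational clip at high attention.
door = Clip(
    name="door",
    exits=[(0, "staccato_gif"), (40, "storm_loop"), (70, "slow_abstract_take")],
)
print(next_clip(door, 55))
```

Storing thresholds on the clip itself keeps the branching local to each scene, which matches the idea of the database as a labyrinth the viewer moves through one door at a time.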

Video database subpatcher, code view

EEG Interface (Subpatcher)

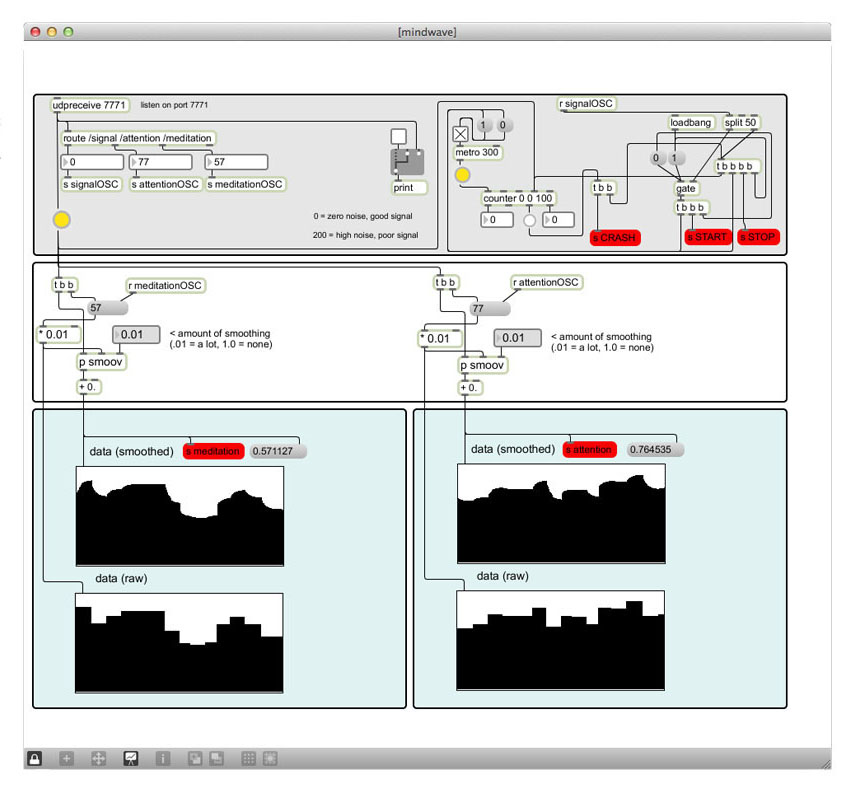

The EEG interface subpatcher listens for incoming data from the NeuroSky MindWave via the BrainWaveOSC library. It is set to capture new data once per second. It also smooths “attention” and “meditation” data, and routes the converted streams back to the main patch. “Signal” data is used to automatically start and stop the piece upon successful dis/connection with the EEG device, as well as to catch errors and restart in the event of a crash.
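The smoothing and start/stop behavior can be sketched in Python as follows. This is a guess at the general shape, not the patch's method: the source does not say how the data is smoothed, so an exponential moving average is assumed here, and the OSC transport is left out entirely. `Smoother`, `SessionGate`, and the `connected` flag (assumed to be derived from the “signal” data upstream) are hypothetical names.

```python
class Smoother:
    """Exponential moving average over once-per-second readings (assumed method)."""

    def __init__(self, alpha: float = 0.3):
        self.alpha = alpha  # 0 < alpha <= 1; smaller = smoother but slower to react
        self.value = None   # no reading received yet

    def update(self, reading: float) -> float:
        if self.value is None:
            self.value = float(reading)  # first reading seeds the average
        else:
            self.value = self.alpha * reading + (1 - self.alpha) * self.value
        return self.value


class SessionGate:
    """Turn a stream of connection readings into start/stop events."""

    def __init__(self):
        self.running = False

    def update(self, connected: bool):
        """Return "start" or "stop" when the connection state changes, else None."""
        if connected and not self.running:
            self.running = True
            return "start"
        if not connected and self.running:
            self.running = False
            return "stop"
        return None
```

Smoothing matters because raw once-per-second values can jump sharply, and an unsmoothed stream would make the mixer switch clips or change speed erratically.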

EEG subpatcher, code view

THEORETICAL FRAMEWORK

I wrote a manifesto about the whole thing.

A "groundless" cosmology of time and be-ing

I begin with the premise that the distinction we make between the representational and the abstract is never settled. It is a negotiation between visual perception, which is largely determined by culture, and mental observation, which steps in to fill in the blanks with what the visual projection area of the brain determines sufficient to deliver a seamless coherent experience bound in time.

From there, I ask:

Is the neurological process of temporal binding the foundational process for world-making, given that the “I” of identity is born of this process, all of our stories and narratives are shaped by this process, as are our cultural myths? In other words, would there be a you or a me or worldview without time-binding?

What if the persistence of time and the persistence of the image are really one and the same? How does the mode of transmission, or medium, with which we view these images shape how we perceive and create our realities? And how does the digital, networked image produce or disrupt the ways in which we self-identify and shape our worlds – worlds now heavily mediated by digital screens? Can representations be multiplied to infinity? Where is the ground of reality in this global network of machines and infinite representations?

Turning to the internet for answers, I hunt for patterns in thousands of image search queries. Of particular interest are patterns evidencing that the processes of meaning/identity making and narrative structuring are taking on novel, multiple forms in-and-because of their digitalness.

I filter my searches for things that are being shared, reinterpreted, and proliferated on a massive scale. I find these things to be predominantly: cute baby animals, porn, violent catastrophe, and memes. I sample many of these images in the project.

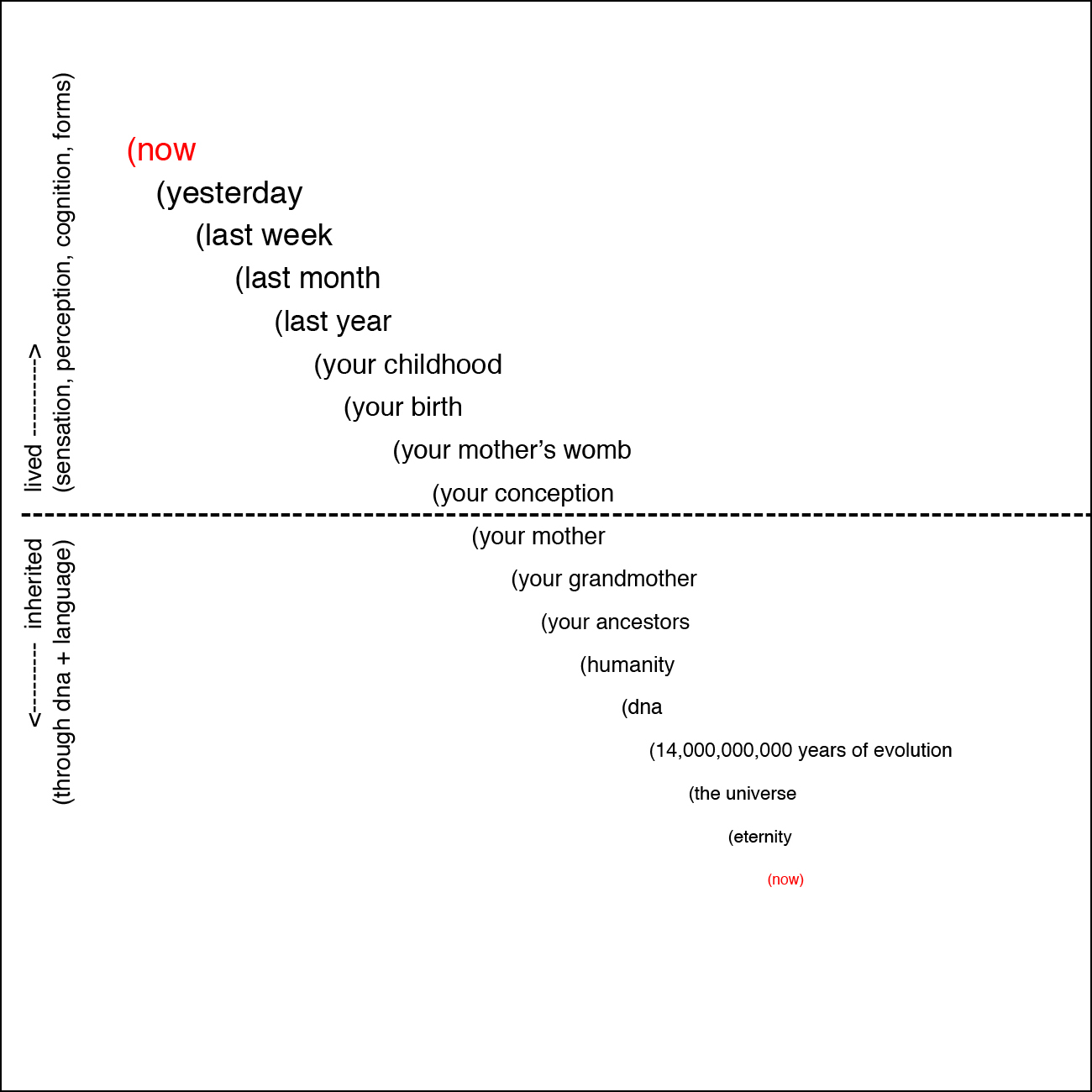

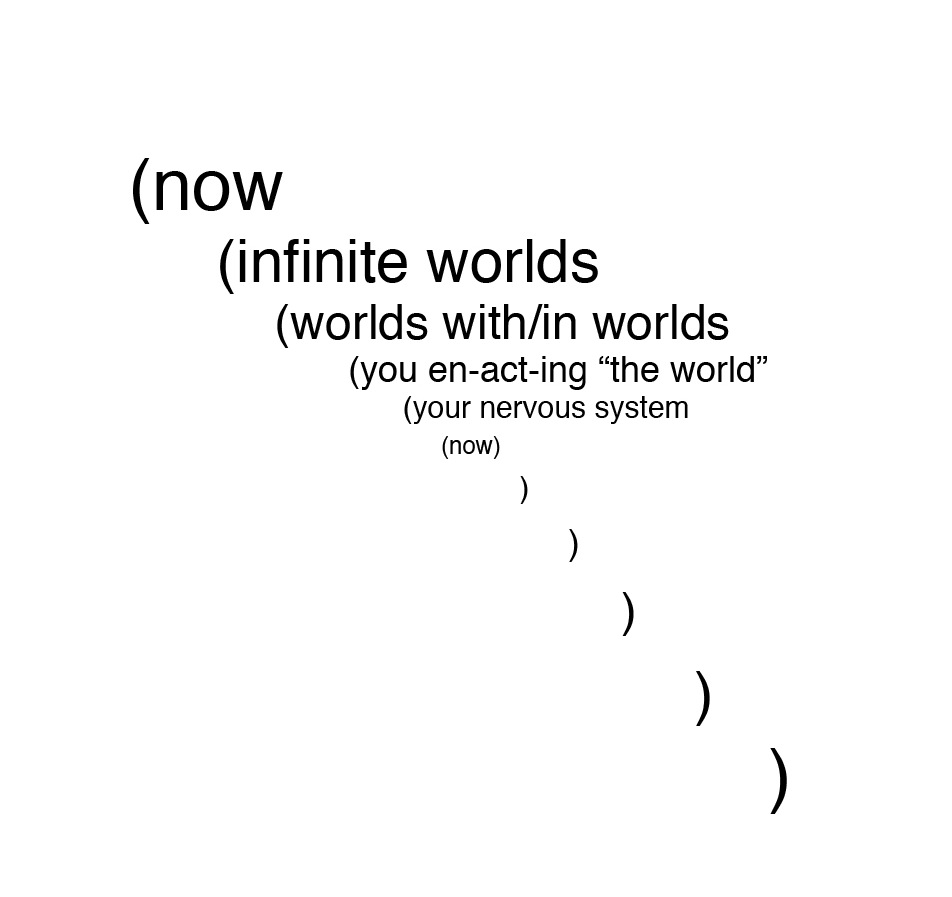

I also consider three models of time and temporality: linear historical time (end times), the temporality of memory and remembering (fractal time), and the here-and-now embodied present moment (now time):

End Times

Fractal Time (memory events), my interpretation

Now Time ( ), my interpretation

Other framing questions and concepts:

The problem with representation

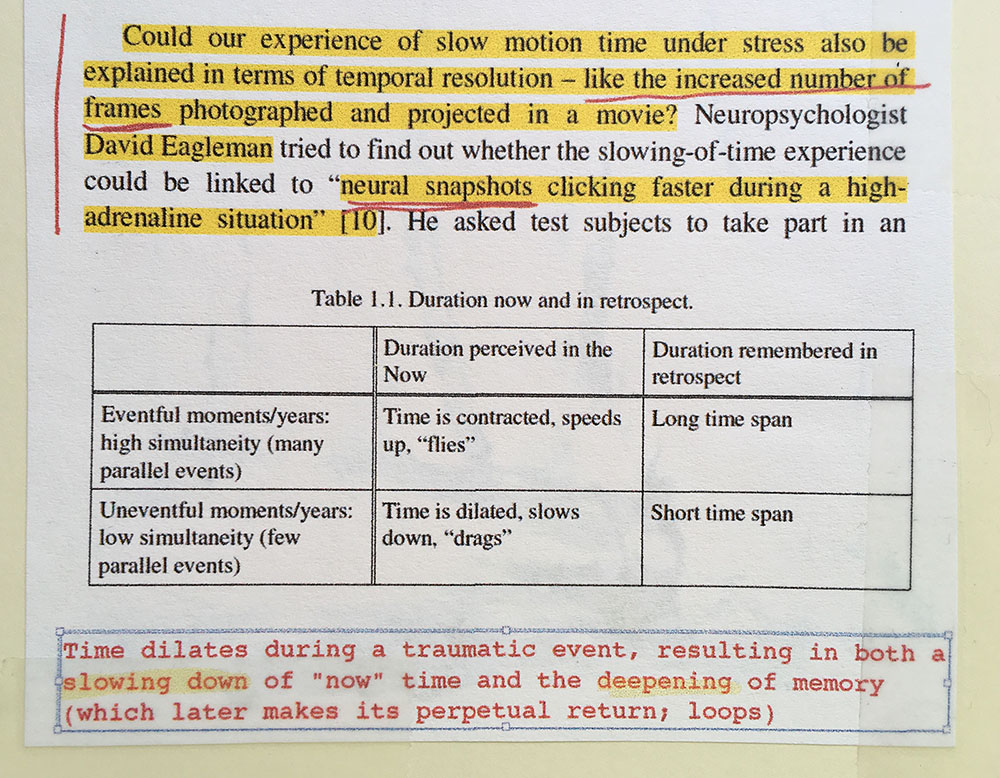

Theory of time dilation during traumatic events

The role of visual long-term memory in structuring episodic memory

OUTCOME

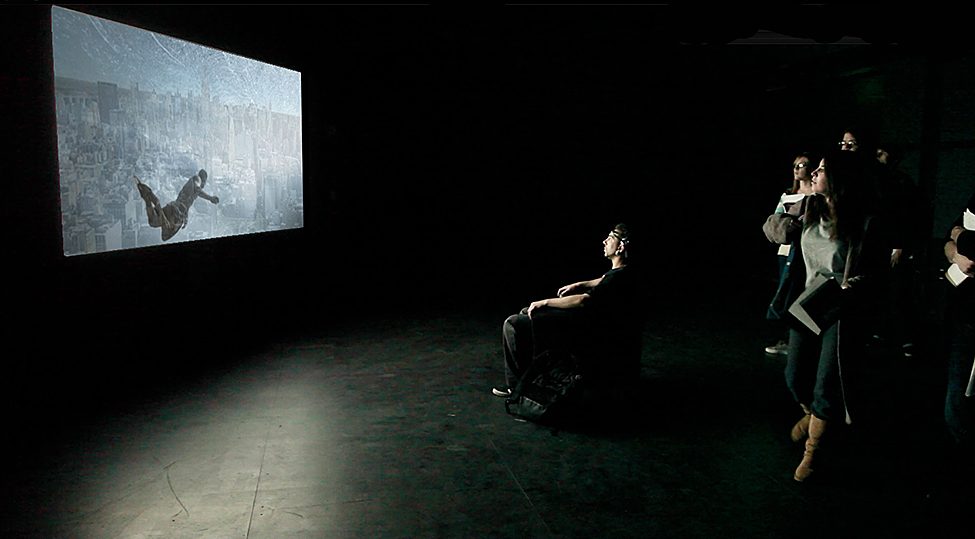

Digital Arts Research Center, UCSC, Santa Cruz, California

The Lab, San Francisco, CA

PROJECT CV

AWARDS

Sole recipient of a two-month residency at SFAI, awarded by CURRENTS New Media Festival, Santa Fe, NM (2013)

Shortlist selection (10 projects were selected from over 420 works), Celeste Prize for beyondmemory, Florence, Italy (2012)

EXHIBITIONS

The Lab, San Francisco, California (2014)

LAST Festival, ZERO 1 Garage, San Jose, California (2014)

Currents International New Media Festival, Santa Fe, New Mexico (2013)

Outcasting Fourth Wall Festival, Cardiff, Wales (2012)

Athens Video Art Festival, International Festival of Digital Arts & New Media, Athens, Greece (2012)

beyondmemory, Florence, Italy (2012)

Digital Arts Research Center, University of California, Santa Cruz, Santa Cruz, California (2012)

PRESS

“The LAST Festival”, Leonardo Journal, MIT Press, Vol 48 Issue 2 (April 2015)

“The Future is Here, That Much is Clear”, THE Magazine (August 2013)

“Going Digital in the Desert”, Filmmaker Magazine (June 22, 2013)

Interview, “Gamers on Game”, KZSC Radio, Santa Cruz, CA (March 26, 2012)